Introduction

Paper: Not Just Prompt Engineering: A Taxonomy for Human-LLM Interaction Modes.

Web: Human LLM Interactive App.

Overall view

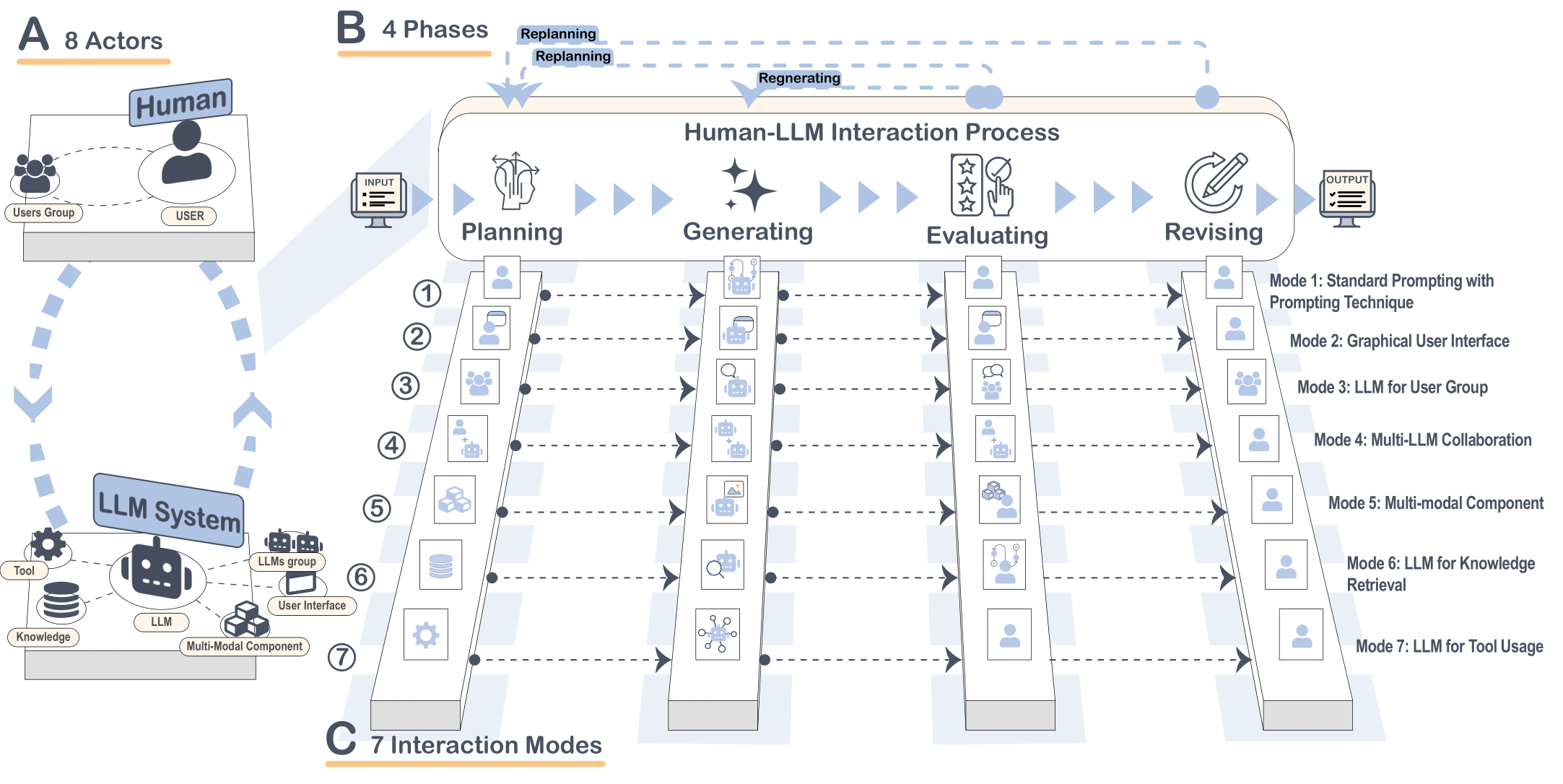

Figure: This taxonomy includes 7 main interaction modes and 23 sub-interaction modes. Across all interaction modes, there are four main phases involving various actors, including the user, user group, knowledge base, user interface, LLM group, multi-modal component, and external tools. These actors operate within each phase to facilitate the entire process of human-LLM interaction across various types.

From Teletypes to Conversations

Every use of a computer involves an interaction mode: a pattern of interaction between the user and the computer. Interaction modes have evolved considerably, from command-line interfaces on early teletypes, through direct manipulation of on-screen images, to conversations with chatbots.

The Rise of Prompt Engineering: Unlocking LLM Potential

Since the introduction of Large Language Models (LLMs), especially ChatGPT, conversation has become the "default" mode of interaction between human users and LLMs. The same holds for other notable models and platforms such as Claude, Gemini, and Llama 2.

This interaction is based on prompts—specific instructions given to an LLM that allow it to grasp the user's intent and then generate meaningful outcomes through dialogue.

Several approaches have been used to draw out the full capabilities of LLMs. First, prompt engineering, including techniques like zero-shot, few-shot, and chain-of-thought prompting, enables the completion of complex tasks such as solving mathematical problems, writing, and coding. Furthermore, researchers have identified a series of prompt patterns, similar to software design patterns, that can be employed to construct complex prompts. Examples include the "flipped interaction" pattern, in which the LLM asks the questions instead of merely producing outputs, and the "infinite generation" pattern, which enables continuous output generation without repeated user prompts.
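The techniques named above can be illustrated as plain prompt templates. The following is a minimal sketch, not a canonical formulation: the function names and the exact wording of each template are assumptions made for illustration, and no model is called.

```python
from typing import List, Tuple


def zero_shot(task: str) -> str:
    """Zero-shot: state the task with no worked examples."""
    return f"{task}\nAnswer:"


def few_shot(task: str, examples: List[Tuple[str, str]]) -> str:
    """Few-shot: place solved question/answer pairs before the new task."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {task}\nA:"


def chain_of_thought(task: str) -> str:
    """Chain-of-thought: cue the model to reason before answering.

    The cue below is one commonly used phrasing, chosen here as an assumption.
    """
    return f"Q: {task}\nA: Let's think step by step."


def flipped_interaction(goal: str) -> str:
    """Flipped interaction: ask the model to pose the questions itself."""
    return (
        f"I want to {goal}. Ask me questions one at a time until you have "
        "enough information, then give your answer."
    )


# Build a few-shot prompt from one solved example.
prompt = few_shot("2+2?", [("1+1?", "2")])
```

Each function returns an ordinary string, so the result can be passed to whichever LLM interface is in use.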

However, text-based conversational prompting has limitations, particularly in handling larger chunks of text data or performing complex, multi-step tasks such as data analysis, where initial data pre-processing must be followed by generating a series of reports.

To address these limitations, HCI researchers have explored diverse human-LLM interaction designs to develop systems that expand the boundaries of conversational capabilities in LLMs. A key strategy involves applying various HCI techniques, such as visual programming and direct manipulation, to create LLM-powered systems that integrate a series of prompts.
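One way to picture a system that "integrates a series of prompts" is a simple prompt chain, in which the output of one step becomes the input of the next (for example, data pre-processing followed by report generation). The sketch below is an assumption-laden illustration rather than any particular system's design: `run_chain`, `echo_llm`, and the step templates are hypothetical names, and `echo_llm` is a stand-in that does not call a real model.

```python
from typing import Callable, List


def run_chain(llm: Callable[[str], str], templates: List[str], data: str) -> List[str]:
    """Run prompts in sequence, feeding each output into the next {input} slot."""
    outputs: List[str] = []
    current = data
    for template in templates:
        current = llm(template.format(input=current))
        outputs.append(current)
    return outputs


def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: wraps the prompt in brackets."""
    return f"[{prompt}]"


# Two illustrative steps: pre-process first, then report on the result.
steps = ["Clean this data: {input}", "Write a report on: {input}"]
results = run_chain(echo_llm, steps, "raw.csv")
```

Swapping `echo_llm` for a function that wraps a real model would leave `run_chain` unchanged, which is the point of keeping the model behind a plain callable.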

Why Develop a Taxonomy Framework?

Despite this growing body of work, no comprehensive framework currently exists that covers these diverse interaction perspectives and can serve as a design reference, in the way that phylogenetic trees do in biology or design patterns do in software engineering.

Our goal with this taxonomy is to provide a theoretical foundation that can be utilized as a reference tool for LLM system designers and developers to: 1) quickly understand what interaction modes they can use and relevant dimensions they should consider when using a specific mode, and 2) create new interaction modes through combinations of the raw interaction modes we identified.

To achieve our goal, we performed a systematic review of papers published in major AI and HCI venues since 2021. The review covered 1009 papers, of which 345 were examined specifically for interaction modes. Through this literature review, we identified seven types of interaction modes (prompting, UI, user group, LLM team, multimodal, knowledge, and tool) for the human-LLM interaction process, together with their basic building blocks: eight key actors (human, LLM, interface, database, group, modal, multi-LLM, and gear) and four key phases (plan, generate, evaluate, and revise).
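The building blocks listed above can be written down as a small data model, which may help when describing a design as a combination of modes. This is only a sketch: the mode, actor, and phase names come from the text, while the `InteractionDesign` class and the example combination are hypothetical and do not reflect any mapping defined by the taxonomy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Tuple


class Mode(Enum):
    PROMPTING = "prompting"
    UI = "UI"
    USER_GROUP = "user group"
    LLM_TEAM = "LLM team"
    MULTIMODAL = "multimodal"
    KNOWLEDGE = "knowledge"
    TOOL = "tool"


class Actor(Enum):
    HUMAN = "human"
    LLM = "LLM"
    INTERFACE = "interface"
    DATABASE = "database"
    GROUP = "group"
    MODAL = "modal"
    MULTI_LLM = "multi-LLM"
    GEAR = "gear"


class Phase(Enum):
    PLAN = "plan"
    GENERATE = "generate"
    EVALUATE = "evaluate"
    REVISE = "revise"


@dataclass(frozen=True)
class InteractionDesign:
    """Hypothetical record of one system design built from the blocks above."""
    name: str
    modes: FrozenSet[Mode]
    actors: FrozenSet[Actor]
    phases: Tuple[Phase, ...] = tuple(Phase)  # defaults to all four phases, in order


# Illustrative combination only; the actor choice here is an assumption.
design = InteractionDesign(
    name="chat with calculator",
    modes=frozenset({Mode.PROMPTING, Mode.TOOL}),
    actors=frozenset({Actor.HUMAN, Actor.LLM, Actor.GEAR}),
)
```

Using frozen sets keeps a design hashable and order-independent, so two designs that combine the same modes compare equal regardless of how they were listed.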

We anticipate that these interaction modes will become crucial across various tasks where prompts act as the primary mechanism driving system functionality.