Mode 2: Prompting with User Interface (UI)

Definition

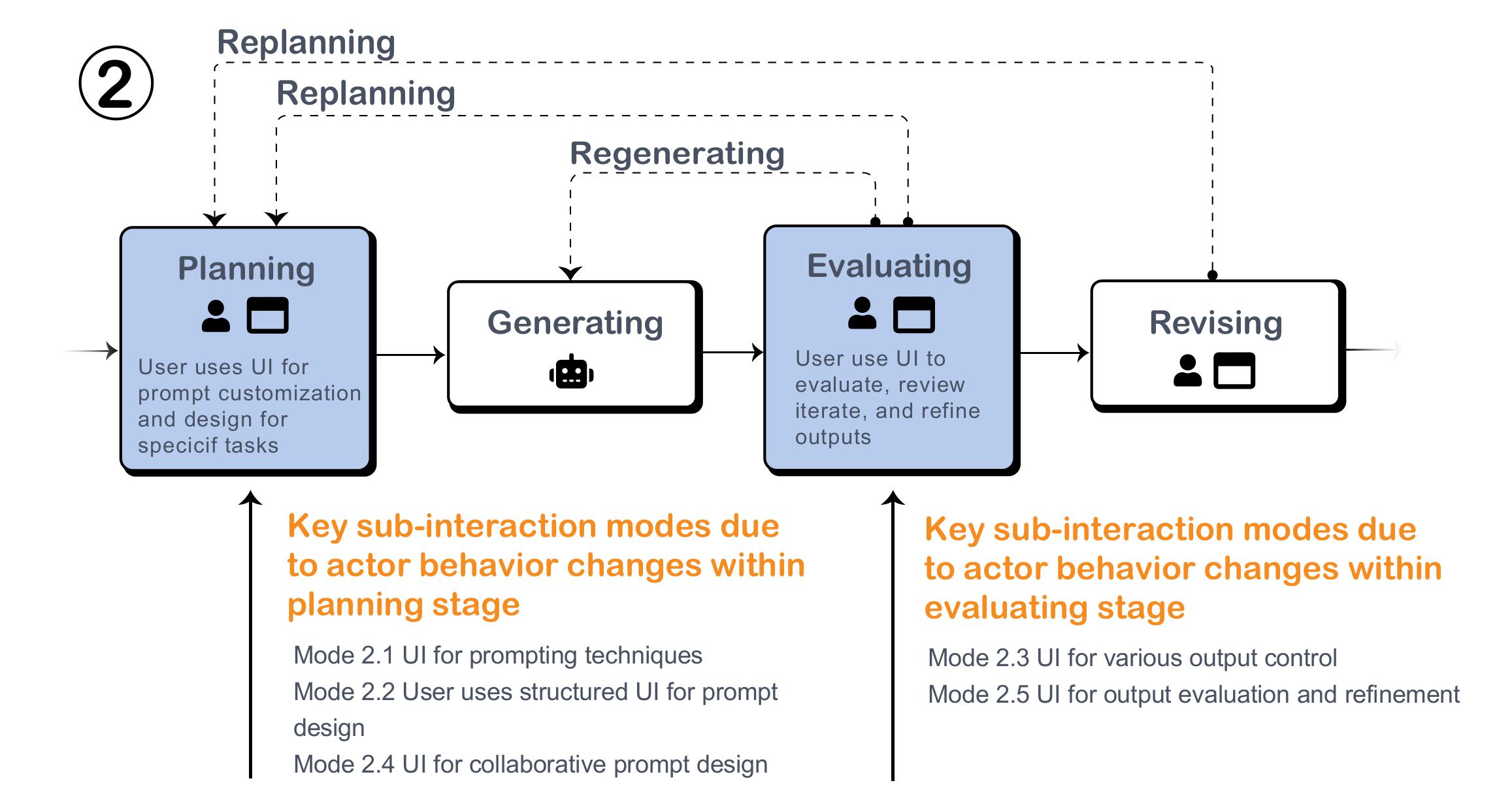

This approach improves the prompts given to LLMs by adding a user interface (UI) to the process. The UI can support both the planning and the evaluation stages. During planning, it can help users formulate prompts. During evaluation, it can help users assess previously proposed alternatives and compare them.

Figure: How UIs shape behaviors during the Planning and Evaluating stages.

Key Interaction Modes and Examples

User uses UI to support prompting techniques.

- Reasoning. By directly manipulating intermediate steps in a reasoning chain, users can generate content at a higher level of abstraction or steer generation in specific directions for deeper exploration. Visual programming techniques, such as prompt chain design, are one way to support this.

- Multiple prompts. UI design can make it easy to test many prompt variations within a single interaction flow. This is particularly useful for rapidly prototyping complex artifacts, because users can experiment with and refine their creations across different prompts, inputs, and models. One example is DesignAID, which generates multiple prompts from a single input prompt and shows users each prompt alongside its generated image, so the UI automates the generation of prompt variations. Another example is VISAR, which uses visual programming to give users control over the structure of argumentative writing. It supports rapid prototyping of prompt ideas, letting users quickly test different ways of organizing their writing. In both cases, users can select from multiple system-generated responses.

- Role Play.

- Knowledge framework, such as usability heuristics.
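The prompt chain idea in the Reasoning item can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any particular tool. The `Step` and `PromptChain` classes and the `fake_llm` stand-in are hypothetical, and a real system would call an actual model and render each step as an editable node in a visual editor. The key behavior is that when the user edits an intermediate output, that edit is kept and every downstream step is recomputed from it.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical model interface: any function from a prompt string to generated text.
LLM = Callable[[str], str]


@dataclass
class Step:
    name: str
    template: str  # "{previous}" is replaced by the previous step's output
    output: Optional[str] = None
    locked: bool = False  # True while the user's manual edit should be kept


@dataclass
class PromptChain:
    steps: List[Step] = field(default_factory=list)

    def run(self, llm: LLM, user_input: str) -> str:
        previous = user_input
        for step in self.steps:
            if not step.locked:
                step.output = llm(step.template.format(previous=previous))
            previous = step.output
        return previous

    def edit(self, name: str, new_output: str) -> None:
        """Direct manipulation: the user overwrites one intermediate result."""
        for i, step in enumerate(self.steps):
            if step.name == name:
                step.output = new_output
                step.locked = True
                # Everything downstream depends on the edited value, so recompute it.
                for later in self.steps[i + 1:]:
                    later.locked = False
                return
        raise KeyError(name)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; it wraps the prompt so the data flow is visible.
    return f"<{prompt}>"


chain = PromptChain([
    Step("outline", "Outline: {previous}"),
    Step("draft", "Draft from: {previous}"),
])
print(chain.run(fake_llm, "remote work"))  # <Draft from: <Outline: remote work>>
chain.edit("outline", "1. focus 2. commute")
print(chain.run(fake_llm, "remote work"))  # <Draft from: 1. focus 2. commute>
```

Locking the edited step, rather than re-running it, is what lets a user redirect the chain at an intermediate point without the model overwriting their change.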
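The Multiple prompts item describes testing many combinations of prompts, inputs, and models side by side. The sketch below shows that grid in Python. It is an assumption-laden illustration: `expand_prompt` uses fixed modifiers, whereas a tool like DesignAID generates its variants automatically, and the two "models" are toy functions rather than real model calls. All function names here are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]


def expand_prompt(base: str, modifiers: List[str]) -> List[str]:
    """Hypothetical variation step: the base prompt plus one variant per modifier.

    Fixed modifiers keep this sketch deterministic; a real tool would
    generate the variants automatically.
    """
    return [base] + [f"{base}, {m}" for m in modifiers]


def run_grid(
    prompts: List[str], inputs: List[str], models: Dict[str, Model]
) -> List[Tuple[str, str, str, str]]:
    """Run every (prompt, input, model) combination so results can be compared side by side."""
    rows = []
    for prompt, text, (name, model) in product(prompts, inputs, models.items()):
        rows.append((prompt, text, name, model(f"{prompt}\n{text}")))
    return rows


# Two toy "models" stand in for real ones.
models: Dict[str, Model] = {
    "upper": lambda p: p.upper(),
    "length": lambda p: str(len(p)),
}
prompts = expand_prompt("a chair", ["minimalist", "made of wood"])
grid = run_grid(prompts, ["for a living room"], models)
print(len(grid))  # 6 = 3 prompts x 1 input x 2 models
for prompt, _, name, output in grid:
    print(f"[{name}] {prompt!r} -> {output!r}")
```

In a UI, each row of `grid` would become one cell the user can inspect, and selecting a cell corresponds to choosing among the system-generated responses.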

User uses structured UI to design prompt.

This approach structures the prompt input through the UI. The components of a prompt, such as the task description, specific constraints, output requirements, and few-shot examples, can be entered in separate fields and then assembled by the interface. One example is DiaryMate, where users request suggestions from an LLM and customize its output through an interface that accepts keywords and offers a slider for adjusting the preference between "typical sentences" and "unique sentences."
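A structured prompt form can be sketched as a data class whose fields mirror the UI controls and whose `assemble` method builds the final prompt text. This is a minimal sketch, not DiaryMate's implementation: the `PromptForm` class, its field names, and the three-band mapping from the slider value to a style instruction are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptForm:
    """Hypothetical structured prompt form; each field maps to one UI control."""
    task: str
    keywords: List[str] = field(default_factory=list)
    constraints: List[str] = field(default_factory=list)
    output_requirements: List[str] = field(default_factory=list)
    examples: List[Tuple[str, str]] = field(default_factory=list)  # few-shot (input, output) pairs
    uniqueness: float = 0.5  # slider: 0.0 = typical sentences, 1.0 = unique sentences

    def assemble(self) -> str:
        if not 0.0 <= self.uniqueness <= 1.0:
            raise ValueError("uniqueness must be between 0 and 1")
        parts = [f"Task: {self.task}"]
        if self.keywords:
            parts.append("Keywords: " + ", ".join(self.keywords))
        parts += [f"Constraint: {c}" for c in self.constraints]
        parts += [f"Output requirement: {r}" for r in self.output_requirements]
        # Translate the slider position into an explicit style instruction.
        if self.uniqueness < 1 / 3:
            parts.append("Prefer typical, commonly used sentences.")
        elif self.uniqueness > 2 / 3:
            parts.append("Prefer unique, unexpected sentences.")
        else:
            parts.append("Balance typical and unique sentences.")
        for example_in, example_out in self.examples:
            parts.append(f"Example input: {example_in}\nExample output: {example_out}")
        return "\n".join(parts)


form = PromptForm(
    task="Suggest the next sentence for today's diary entry.",
    keywords=["rain", "coffee"],
    constraints=["At most 20 words."],
    uniqueness=0.9,
)
print(form.assemble())
```

Keeping the slider as a number and translating it only at assembly time means the same control could instead drive a decoding parameter such as sampling temperature. Which mapping a given tool uses is a design decision the text above does not specify.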

User uses UI for various output format control.

UI design can also improve LLM outputs by letting users specify output formats and controls, such as selecting a size, picking a color, or choosing a button layout in the output interface. The aim is for the LLM to produce results that are not only more functional but also more complex, structured, and layered, which makes the generated content more useful. A typical example is GenLine, developed by Jiang et al., which lets users generate CSS styles, such as HTML code for a styled button, and provides an interface for deciding whether to accept the generated style. A variant of this tool, GenForm, uses a form interface to support the structured generation of mixed outputs, including HTML, JavaScript, and CSS code.
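One way to picture output format control is to turn the UI selections into explicit requirements in the request, check the returned code against those same selections, and only then ask the user to accept it. The Python sketch below follows that pattern for a single CSS rule. It is hypothetical throughout: `ButtonOptions`, `build_request`, `satisfies`, `propose`, and `fake_llm` are illustrative names, not GenLine's or GenForm's API, and the CSS parsing is deliberately naive (one rule, no comments or nesting).

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

LLM = Callable[[str], str]


@dataclass
class ButtonOptions:
    """Hypothetical selections made through the output-format UI."""
    width_px: int
    color: str   # any CSS color literal, e.g. "#3366ff"
    layout: str  # "inline" or "block"

    def display(self) -> str:
        if self.layout not in ("inline", "block"):
            raise ValueError(f"unknown layout: {self.layout}")
        return "inline-block" if self.layout == "inline" else "block"


def build_request(description: str, opts: ButtonOptions) -> str:
    # The UI selections become explicit, checkable format requirements.
    return (
        f"Write one CSS rule for the selector .btn: {description}.\n"
        f"It must set width: {opts.width_px}px; background-color: {opts.color}; "
        f"display: {opts.display()}. Return only the CSS."
    )


def declarations(css: str) -> Dict[str, str]:
    """Parse 'prop: value;' pairs from a single CSS rule (no nesting or comments)."""
    start, end = css.find("{"), css.rfind("}")
    body = css[start + 1:end] if start != -1 and end > start else css
    decls: Dict[str, str] = {}
    for item in body.split(";"):
        if ":" in item:
            prop, value = item.split(":", 1)
            decls[prop.strip().lower()] = value.strip().lower()
    return decls


def satisfies(css: str, opts: ButtonOptions) -> bool:
    """Check that the generated rule honors every UI selection."""
    required = {
        "width": f"{opts.width_px}px",
        "background-color": opts.color.lower(),
        "display": opts.display(),
    }
    found = declarations(css)
    return all(found.get(prop) == value for prop, value in required.items())


def propose(llm: LLM, description: str, opts: ButtonOptions,
            accept: Callable[[str], bool]) -> Optional[str]:
    """Generate a style, check it against the selections, then let the user accept or reject it."""
    css = llm(build_request(description, opts))
    if satisfies(css, opts) and accept(css):
        return css
    return None


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model; always returns the same rule.
    return ".btn { width: 120px; background-color: #3366FF; display: inline-block; }"


opts = ButtonOptions(width_px=120, color="#3366ff", layout="inline")
print(propose(fake_llm, "a rounded primary button", opts, accept=lambda css: True))
```

Running the automatic check before the accept step means the user is only asked to judge outputs that already match what they selected in the interface.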

User uses UI for Collaborative Prompt Design.

In many cases, several users design prompts together rather than individually. For example, some systems provide UI support for collaboratively editing natural-language commands embedded in code, while others enable asynchronous collaborative writing through a shared interface.

UI for LLM output refinement and evaluation.

UI design can support the iterative parts of an interaction flow through features such as debugging, error labeling, regeneration, and self-repair. These features let users refine their earlier interactions and thereby improve both intermediate and final outputs.
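Error labeling, regeneration, and self-repair can be combined into a small refinement loop: label what is wrong with an output, feed those labels back to the model, and keep every attempt so a UI can display the history. The Python sketch below assumes the desired output is a JSON object with required keys. `label_errors` and `refine` are hypothetical helpers, and the scripted stand-in model replaces a real LLM call.

```python
import json
from typing import Callable, List, Tuple

LLM = Callable[[str], str]


def label_errors(output: str, required_keys: List[str]) -> List[str]:
    """Error labeling: describe what is wrong with an output (empty list = acceptable)."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["the top-level value must be a JSON object"]
    return [f"missing key: {key}" for key in required_keys if key not in data]


def refine(llm: LLM, prompt: str, required_keys: List[str],
           max_attempts: int = 3) -> List[Tuple[str, List[str]]]:
    """Regenerate with the labeled errors fed back (self-repair); keep every attempt."""
    history: List[Tuple[str, List[str]]] = []
    current = prompt
    for _ in range(max_attempts):
        output = llm(current)
        errors = label_errors(output, required_keys)
        history.append((output, errors))
        if not errors:
            break
        current = (
            f"{prompt}\n\nYour previous answer was:\n{output}\n"
            "Fix these problems:\n- " + "\n- ".join(errors)
        )
    return history


# A scripted stand-in model whose answers improve on each call.
answers = iter(['{"title": "Trip"', '{"title": "Trip"}', '{"title": "Trip", "steps": []}'])
history = refine(lambda p: next(answers), "Return a JSON plan with title and steps.", ["title", "steps"])
for attempt, (output, errors) in enumerate(history, start=1):
    print(attempt, errors or "accepted")
```

Returning the full history rather than only the last output is what lets an interface show each attempt with its labels, so users can step back to an earlier version or relabel errors themselves before regenerating.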